PySpark — Fix Column Header with Spaces

We often run into a situation where the Source files have header but with spaces in column names. And moving the data to different systems becomes challenging due to these limitations of spaces in column names in other systems.

First Name => first_name

We will run through a small code for fixing the column names dynamically. There will be no need to do any manual effort.

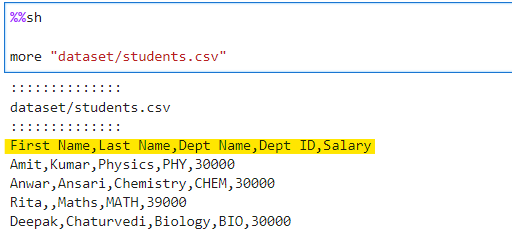

Lets jump into the example. We will start from checking the data for our example file.

%%sh

more "dataset/students.csv"

Python code to fix the header and generate the list of fixed headers. We would replace the spaces with underscore “-”

# Python function to read the column name and fix the space with underscore "_"

from pyspark.sql import DataFrame

def fix_header(df: DataFrame) -> list:

fixed_col_list: list = []

for col in df.columns:

fixed_col_list.append(f"`{str(col).strip()}` as {str(col).strip().replace(' ','_').lower()}")

return fixed_col_list

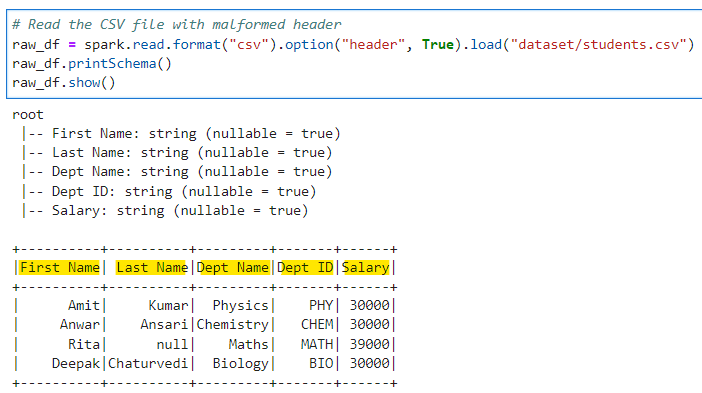

Now, lets read the CSV file to generate the raw DataFrame

# Read the CSV file with malformed header

raw_df = spark.read.format("csv").option("header", True).load("dataset/students.csv")

raw_df.printSchema()

raw_df.show()

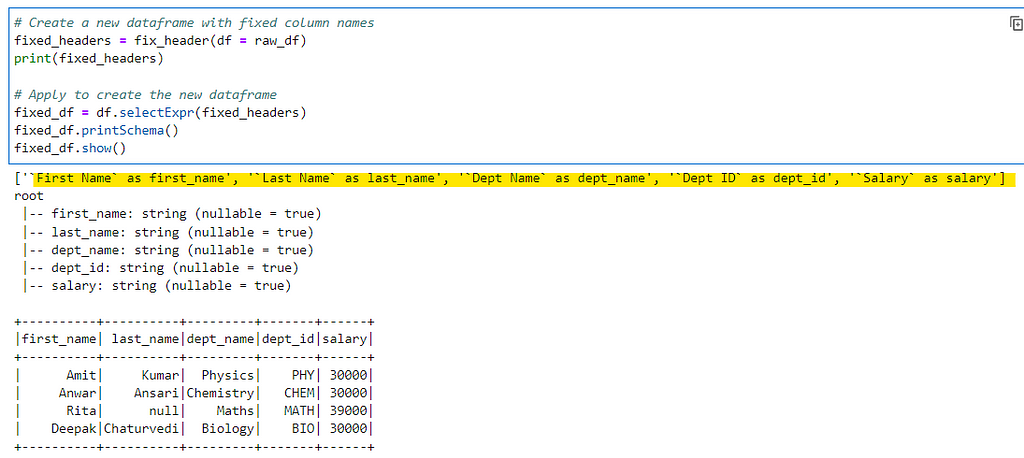

Run the Raw Data Frame though the Python code to get the header list and fix the headers in the Data Frame

# Create a new dataframe with fixed column names

fixed_headers = fix_header(df = raw_df)

print(fixed_headers)

# Apply to create the new dataframe

fixed_df = df.selectExpr(fixed_headers)

fixed_df.printSchema()

fixed_df.show()

This code will work with all data sources until you can read the data in Spark Data Frame and pass it through the Python Code.

Checkout the iPython Notebook on Github — https://github.com/subhamkharwal/ease-with-apache-spark/blob/master/23_Fix_Headers.ipynb

Checkout my Personal blog — https://urlit.me/blog/

Checkout the PySpark Medium Series — https://subhamkharwal.medium.com/learnbigdata101-spark-series-940160ff4d30

Top Five

Following are the top five articles as per views. Don't forget check them out:

Buy me a Coffee

If you like my content and wish to buy me a COFFEE. Click the link below or Scan the QR.

Buy Subham a Coffee

*All Payments are secured through Stripe.

About the Author

Subham is working as Senior Data Engineer at a Data Analytics and Artificial Intelligence multinational organization.

Checkout portfolio: Subham Khandelwal