PySpark — Create Spark Datatype Schema from String

Are you tired of manually writing the schema for a DataFrame with Spark SQL types such as IntegerType, StringType, StructType, etc.?

Then this is for you…

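PySpark has a built-in helper for exactly this task: `_parse_datatype_string`. The leading underscore marks it as an internal function rather than documented public API, so its behaviour may change between Spark releases.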

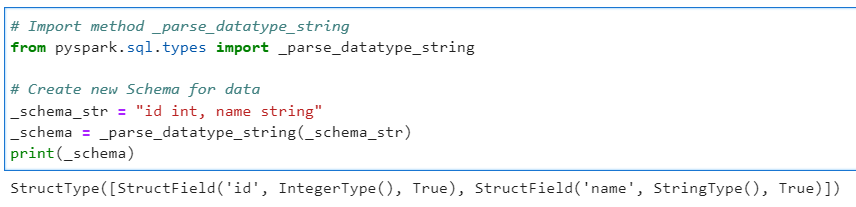

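```python
# Import method _parse_datatype_string
from pyspark.sql import SparkSession
from pyspark.sql.types import _parse_datatype_string

# The helper calls the JVM-side parser, so it needs an active Spark session
spark = SparkSession.builder.getOrCreate()

# Create new Schema for data
_schema_str = "id int, name string"
_schema = _parse_datatype_string(_schema_str)

print(_schema)
```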

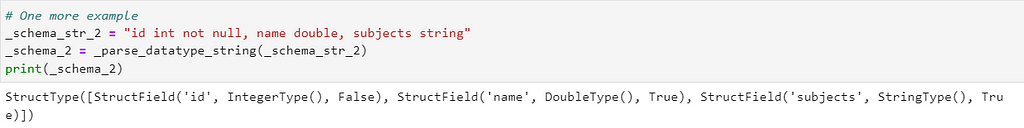

```python
# One more example with a NOT NULL column
_schema_str_2 = "id int not null, name double, subjects string"
_schema_2 = _parse_datatype_string(_schema_str_2)

print(_schema_2)
```

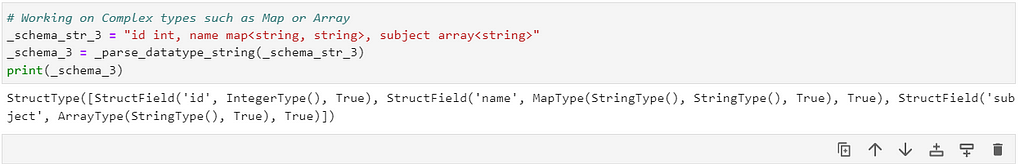

We can also parse complex data types such as maps and arrays:

```python
# Working with complex types such as Map or Array
_schema_str_3 = "id int, name map<string, string>, subject array<string>"
# Parse _schema_str_3 (not _schema_str_2) so the map and array columns appear
_schema_3 = _parse_datatype_string(_schema_str_3)

print(_schema_3)
```

Check out the Jupyter notebook on GitHub: https://github.com/subhamkharwal/ease-with-apache-spark/blob/master/2_create_schema_from_string.ipynb



About the Author

Subham works as a Senior Data Engineer at a multinational data analytics and artificial intelligence organization.

Check out the portfolio: Subham Khandelwal